Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

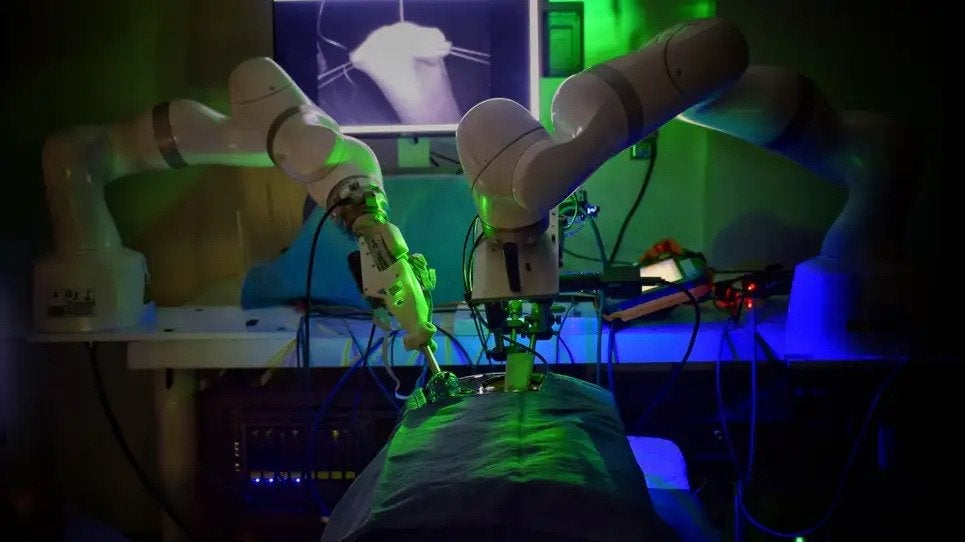

The AI boom is already beginning to enter the medical field in the form of AI-powered visit summaries and patient condition analysis. Now, new research demonstrates how AI training techniques similar to those used for ChatGPT can be used to train surgical robots to operate on their own.

Researchers from Johns Hopkins University and Stanford University developed a training model using video recordings of human-controlled robotic arms performing surgical tasks. By training the robots to imitate the movements in these videos, the researchers believe they can reduce the need to program each individual movement a procedure requires. From The Washington Post:

> The robots have learned to manipulate needles, tie knots and sew wounds on their own. Moreover, the trained robots went beyond mere imitation, correcting their own slips without prompting (for example, by picking up a dropped needle). Scientists have already begun the next phase of the work: combining all the different skills in a complete operation on animal carcasses.

To be sure, robots have been used in the operating room for years: in 2018, the “they did surgery on a grape” meme highlighted how robotic arms can aid surgeries by providing a high level of precision. Approximately 876,000 robot-assisted surgeries were performed in 2020. Robotic instruments can reach places and perform tasks in the body that a surgeon’s hand never could, and they don’t suffer from tremors. Fine, precise instruments can spare nerves from damage. But these robots are usually controlled manually by a surgeon using a controller. The surgeon is always in charge.

Skeptics of more autonomous robots worry that AI models like ChatGPT aren’t “intelligent”: they simply imitate what they’ve seen before and don’t understand the underlying concepts they’re dealing with. The endless variety among human patients poses a problem: what if the AI model has never seen a particular scenario before? What if something goes wrong in a split second during an operation and the AI hasn’t been trained to respond?

At a minimum, autonomous robots used in surgery must be approved by the Food and Drug Administration. In other cases, where doctors use AI to summarize patient visits and make recommendations, FDA approval is not required, because the doctor is technically supposed to review and approve any information the AI produces. That is concerning, because there is already evidence that AI bots can give bad advice or hallucinate, inserting information into meeting transcripts that was never said. How often will a tired, overworked doctor rubber-stamp whatever an AI produces without checking it carefully?

This is reminiscent of recent reports about soldiers in Israel relying on AI to identify attack targets without examining the information very carefully. “Soldiers poorly trained to use the technology (AI) attacked human targets without any confirmation of their predictions,” a Washington Post story reads. “At certain times, the only verification required was that the target was male.” Things can go wrong when people become complacent and are not sufficiently in the loop.

Healthcare is another field with high stakes, certainly higher than the consumer market. If Gmail summarizes an email incorrectly, it’s not the end of the world. AI systems misdiagnosing a health problem or making an error during surgery is a far more serious problem. Who is responsible in that case? The Post spoke with Parekh, director of robotic surgery at the University of Miami.

The stakes are high “because it is a matter of life and death,” he said. Each patient’s anatomy is different, and so is the way a disease behaves from one patient to the next.

“I look at CT scans and MRIs and then operate,” Parekh said. “If you want a robot to do the surgery itself, it needs to understand how to read all the images, CT scans and MRIs.” Robots would also need to learn how to perform laparoscopic surgery, which is done through keyhole-sized or otherwise very small incisions.

Since no technology is perfect, it’s hard to take seriously the idea that artificial intelligence will ever be flawless. This autonomous technology is certainly interesting from a research perspective, but the fallout from a botched surgery performed by an autonomous robot would be monumental. Who is held accountable when something goes wrong? Whose medical license is revoked? People aren’t infallible either, but at least patients have the comfort of knowing their surgeons have trained for years and can be held accountable if something goes wrong. AI models are crude simulations of humans that sometimes behave erratically and have no moral compass.

If doctors are tired and overworked (one reason the researchers give for why this technology could be valuable), perhaps the systemic problems causing the shortage are what need to be addressed. It has been widely reported that the United States faces a severe physician shortage as the field becomes increasingly inaccessible. The country is projected to be short 10,000 to 20,000 surgeons by 2036, according to the Association of American Medical Colleges.