Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Nvidia is scheduled to report fourth-quarter financial results on Wednesday after the bell.

The report is expected to put the finishing touches on one of the most remarkable years ever for a large company. Analysts polled by FactSet expect $38 billion in sales for the quarter ended in January, which would be a 72% increase from a year earlier.

The January quarter will cap off the second fiscal year in which Nvidia's sales doubled. It is an impressive streak, driven by the fact that Nvidia's data center graphics processing units, or GPUs, are essential hardware for building and deploying artificial intelligence services such as OpenAI's ChatGPT. In the past two years, Nvidia shares have risen 478%, at times making it the most valuable U.S. company, with a market capitalization of more than $3 trillion.

But Nvidia's stock has stalled in recent months as investors question where the chipmaker can go from here.

It is trading at the same price as last October, and investors are wary of any sign that Nvidia's most important customers could be tightening their belts after years of heavy capital expenditures. This is particularly worrying following recent AI breakthroughs out of China.

A large share of Nvidia's sales goes to a handful of companies that build massive server farms, usually to rent out to other companies. These cloud companies are typically called "hyperscalers." Last February, Nvidia said a single customer accounted for 19% of its total revenue in fiscal 2024.

Morgan Stanley analysts estimated this month that Microsoft will account for nearly 35% of 2025 spending on Blackwell, Nvidia's latest AI chip. Google is at 32.2%, Oracle at 7.4% and Amazon at 6.2%.

That is why any sign that Microsoft or its rivals could pull back on spending plans can shake Nvidia's stock.

Last week, TD Cowen analysts said they had learned that Microsoft had canceled leases with private data center operators, slowed its process of negotiating new leases and adjusted plans to spend on international data centers in favor of U.S. facilities.

The report raised fears about the sustainability of AI infrastructure growth, which could mean less demand for Nvidia's chips. TD Cowen's Michael Elias said his team's findings point to "a potential oversupply position" for Microsoft. Nvidia shares fell 4% on Friday.

Microsoft pushed back on Monday, saying it still planned to spend $80 billion on infrastructure in 2025.

"While we may strategically pace or adjust our infrastructure in some areas, we will continue to grow strongly in all regions. This allows us to invest and allocate resources to growth areas for our future," a spokesperson told CNBC.

Over the past month, most of Nvidia's key customers touted large investments. Alphabet is targeting $75 billion in capital expenditures this year, Meta will spend as much as $65 billion and Amazon aims to spend $100 billion.

Analysts say that roughly half of AI infrastructure capital expenditures ends up with Nvidia. Many hyperscalers are experimenting with AMD's GPUs and developing their own AI chips to reduce their dependence on Nvidia, but the company holds most of the market for cutting-edge AI chips.

So far, these chips have been used mainly to train new-age AI models, a process that can cost hundreds of millions of dollars. Once AI is developed by companies such as OpenAI, Google and Anthropic, warehouses full of Nvidia GPUs are needed to serve those models to customers. That is why Nvidia projects that its revenue will keep growing.

Another challenge for Nvidia is last month's emergence of Chinese startup DeepSeek, which released an efficient, "distilled" AI model. It performed well enough to suggest that billions of dollars' worth of Nvidia GPUs are not needed to train and use cutting-edge AI. That temporarily sank Nvidia's stock, erasing almost $600 billion of the company's market capitalization.

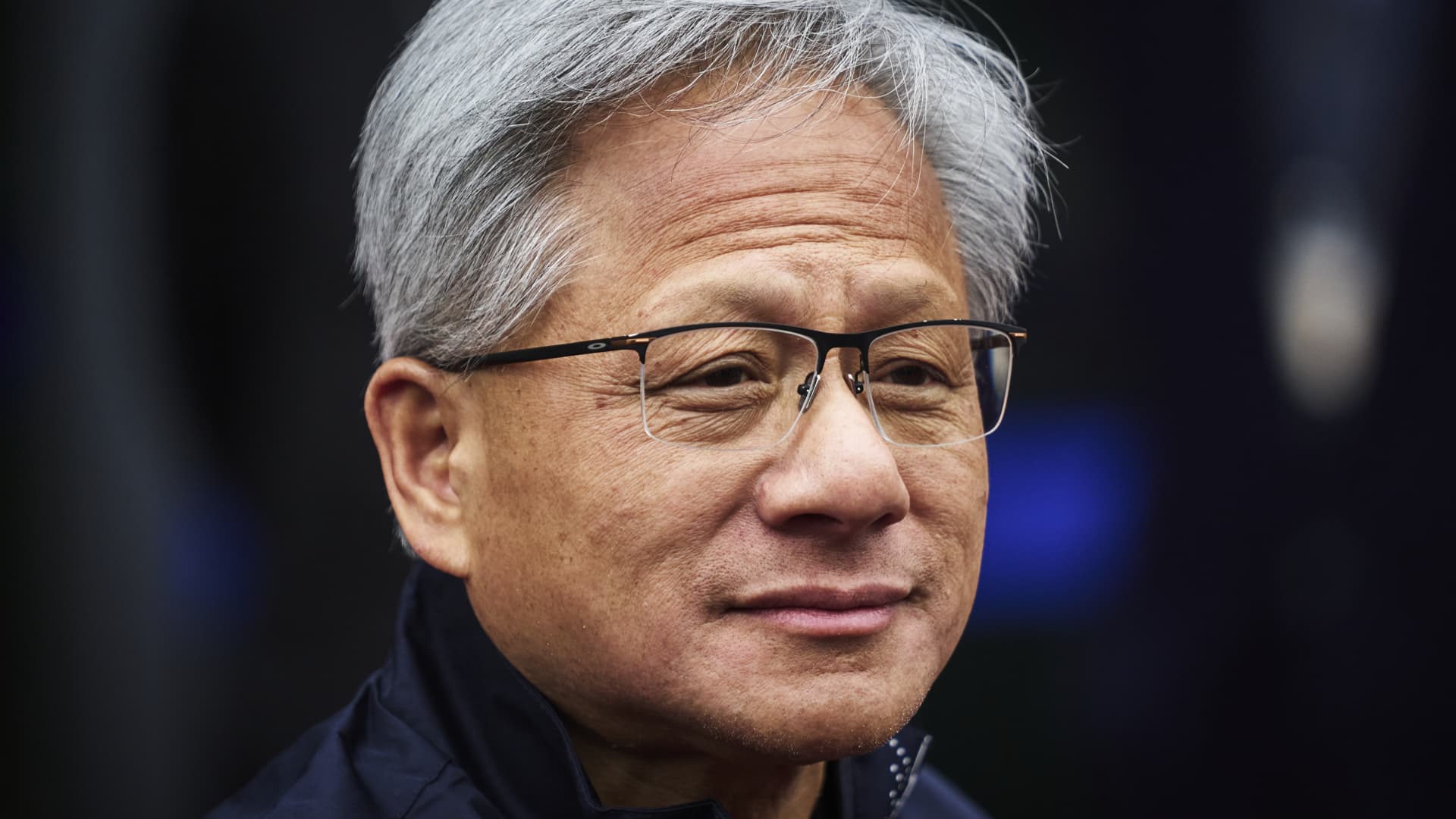

Nvidia CEO Jensen Huang will have a chance on Wednesday to explain why AI will continue to need even more GPU capacity after last year's massive build-out.

Recently, Huang has talked about the "scaling law," an observation from OpenAI in 2020 that AI models get better the more data and compute are used to create them.

Huang said DeepSeek's R1 model points to a new wrinkle in the scaling law that Nvidia calls "test-time scaling." Huang has argued that the next major path to AI improvement is applying more GPUs to the process of deploying AI, or inference. That allows chatbots to "reason," or generate a lot of data while thinking through a problem.

AI models are trained only a few times to create and fine-tune them. But they can be called millions of times per month, so using more compute at inference will require more Nvidia chips deployed to customers.

"The market responded to R1 as in, 'Oh my God, AI is finished,' that AI no longer needs to do more computing," Huang said in a pretaped interview last week. "It's exactly the opposite."